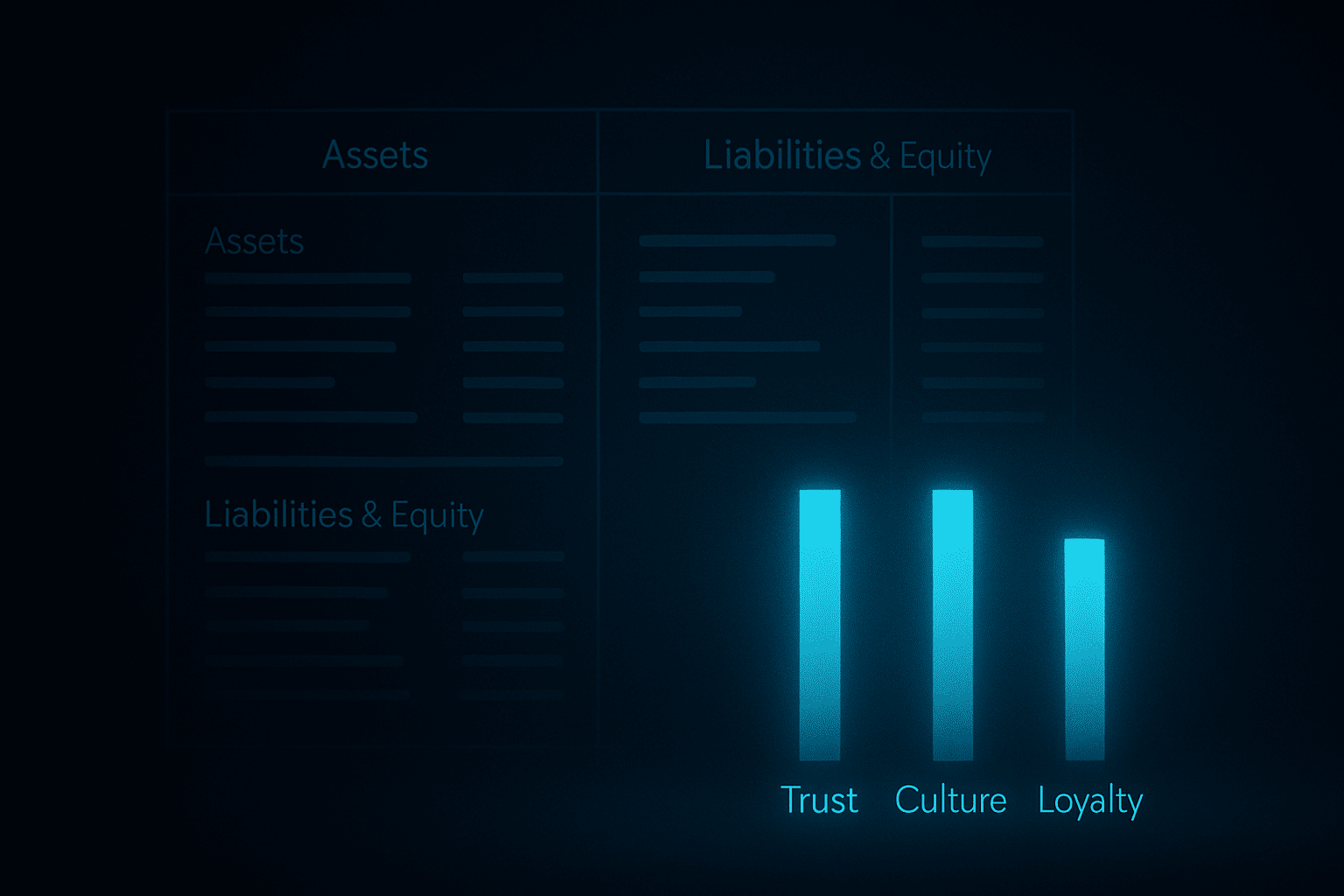

AI’s quick wins are easy to count. The hidden costs—trust, culture, and loyalty—rarely show up until the damage is done.

What leaders miss

Balance sheets celebrate speed and savings. They rarely surface reputational drag or cultural fatigue. When customers feel processed, not served, loyalty quietly declines—and regulators take note.

AI adoption isn’t just technical change; it’s a cultural intervention. Lead it that way.

Leader’s quick scan

- Can you explain high-impact model decisions in plain language?

- Are frontline teams empowered when automation fails?

- Is the data lineage audit-ready today?

- Do incentives reward fairness as well as speed?

Wise leaders count the costs that don’t appear in the quarter—because that’s where trust lives.

Hidden costs matrix

- Opaque outcomes and weak recourse reduce confidence, even when KPIs look great.

- If work loses meaning, engagement drops and attrition rises.

- Untracked data flows and ambiguous accountability invite regulatory heat.

- One viral misstep can erase the savings that justified the project.

Getting the facts right, too

Pair style-aligned coaching with retrieval-augmented generation (RAG) over curated sources to reduce hallucinations and drift.

- Curated sources: policy, knowledge base, approved vendors

- On-policy answers: filtered for tone, scope, brand

- Traceable: citations and logs for audit and improvement
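For teams that want to see what the three points above could look like in practice, here is a minimal Python sketch. It is illustrative only: the `Source` record, the category names, the sample passages, and the keyword-overlap scoring are all assumptions made for this example, and a real deployment would likely swap in a proper search or embedding-based retriever. The idea it shows is simple: retrieve only from approved categories, attach a citation to every passage, and log what was cited.

```python
import json
import re
from dataclasses import dataclass


@dataclass(frozen=True)
class Source:
    source_id: str   # hypothetical identifier used as the citation
    category: str    # hypothetical label for where the passage came from
    text: str


# Assumption: only these categories count as "curated".
APPROVED_CATEGORIES = {"policy", "knowledge_base", "approved_vendor"}

# Made-up sample passages for illustration.
CURATED = [
    Source("POL-001", "policy",
           "Refunds are issued within 14 days of a valid return request."),
    Source("KB-014", "knowledge_base",
           "Customers can start a return request from the orders page."),
    Source("WEB-999", "forum", "Someone said refunds take 90 days."),
]


def tokens(text):
    """Lowercase word set; a crude stand-in for a real retriever's index."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(question, sources, top_k=2):
    """Return the best-matching passages from approved categories only."""
    q = tokens(question)
    scored = []
    for s in sources:
        if s.category not in APPROVED_CATEGORIES:
            continue  # uncurated material never reaches the model
        overlap = len(q & tokens(s.text))
        if overlap > 0:
            scored.append((overlap, s))
    # Highest overlap first; ties broken by id so results are repeatable.
    scored.sort(key=lambda pair: (-pair[0], pair[1].source_id))
    return [s for _, s in scored[:top_k]]


def build_context(question, sources, audit_log):
    """Build cited context for a prompt and record what was used."""
    hits = retrieve(question, sources)
    cited = [s.source_id for s in hits]
    audit_log.append(json.dumps({"question": question, "cited": cited}))
    context = "\n".join(f"[{s.source_id}] {s.text}" for s in hits)
    return context, cited


audit_log = []
context, citations = build_context("How long do refunds take?", CURATED, audit_log)
print(context)
print(audit_log[0])
```

In this sketch the forum post also mentions refunds, but it is filtered out before scoring because its category is not approved. Tone, scope, and brand filtering on the generated answer would be a separate step that is not shown here.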

Questions to price the invisible

- What behaviour will this AI change, and how will we measure it ethically?

- Where could shortcuts undermine fairness or customer dignity?

- Who is accountable when the model is confidently wrong?

- How will we repair trust if something goes sideways?

The SAFER compass

Strategic • Agile • Feasible • Ethical • Resilient—use this to choose what to automate, what to augment, and what to leave human.

Protect trust while you scale. Pilot with guardrails, then measure what matters.
